It is only fitting that the Edmonton Public Library host the ODD2015 Hackathon (on 21 February 2015), as the Library stands for three of the main themes of this year's hack and the events leading up to it: (1) Inclusion; (2) Civic Engagement; and (3) Literacy.

Inclusion

The Edmonton Public Library is a space held in trust for all of Edmonton's citizens. It provides equal access, and doesn't care which community you belong to or how you self-identify. To reflect this level of inclusion, and to push back on the culture of exclusion seen in so many tech industries (think GamerGate and OpenStreetMap), the #ODD2015 Hackathon is instituting the following Hacking Code of Conduct:

Our hackathon is dedicated to providing a harassment-free experience for everyone, regardless of gender, age, sexual orientation, disability, physical appearance, body size, race, ethnicity, nationality, religion, previous hackday attendance or computing experience (or lack of any of the aforementioned). We do not tolerate harassment of hackathon participants in any form. Sexual language and imagery is not appropriate at any hackathon venue, including hacks, talks, workshops, parties, social media and other online media. Hackathon participants violating these rules may be sanctioned or expelled from the hackathon without a refund (if applicable) at the discretion of the hackathon organisers.

Civic Engagement

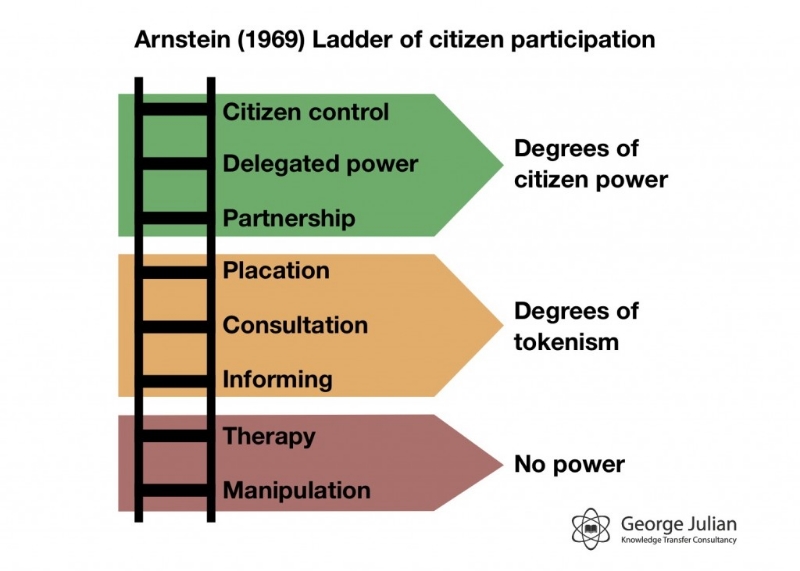

Arnstein's Ladder of Citizen Participation describes the relationship between government and citizens. At the bottom of the ladder, citizens are manipulated and have no power. At the top of the ladder, citizens and governments are indistinguishable. While I would not hazard a guess as to where we sit relative to the City of Edmonton or the Government of Alberta, I do know that Open Data and hacking serve to move citizens up the ladder.

As we learn to use the tools of hacking (and by this I don't just mean coding, but also data scraping, design, networking and outreach, and generally organizing around the building of something), we become more engaged with each other and more likely to push back against government policy.

In the best case, we are given the data with which governments make decisions, so that we can draw our own conclusions.

Remember:

Real change comes from the bottom up. Innovation occurs at the interface of diverse minds and perspectives. Collaboration is hard. It requires stepping beyond comfort, into the unfamiliar. It’s worth it.

Literacy

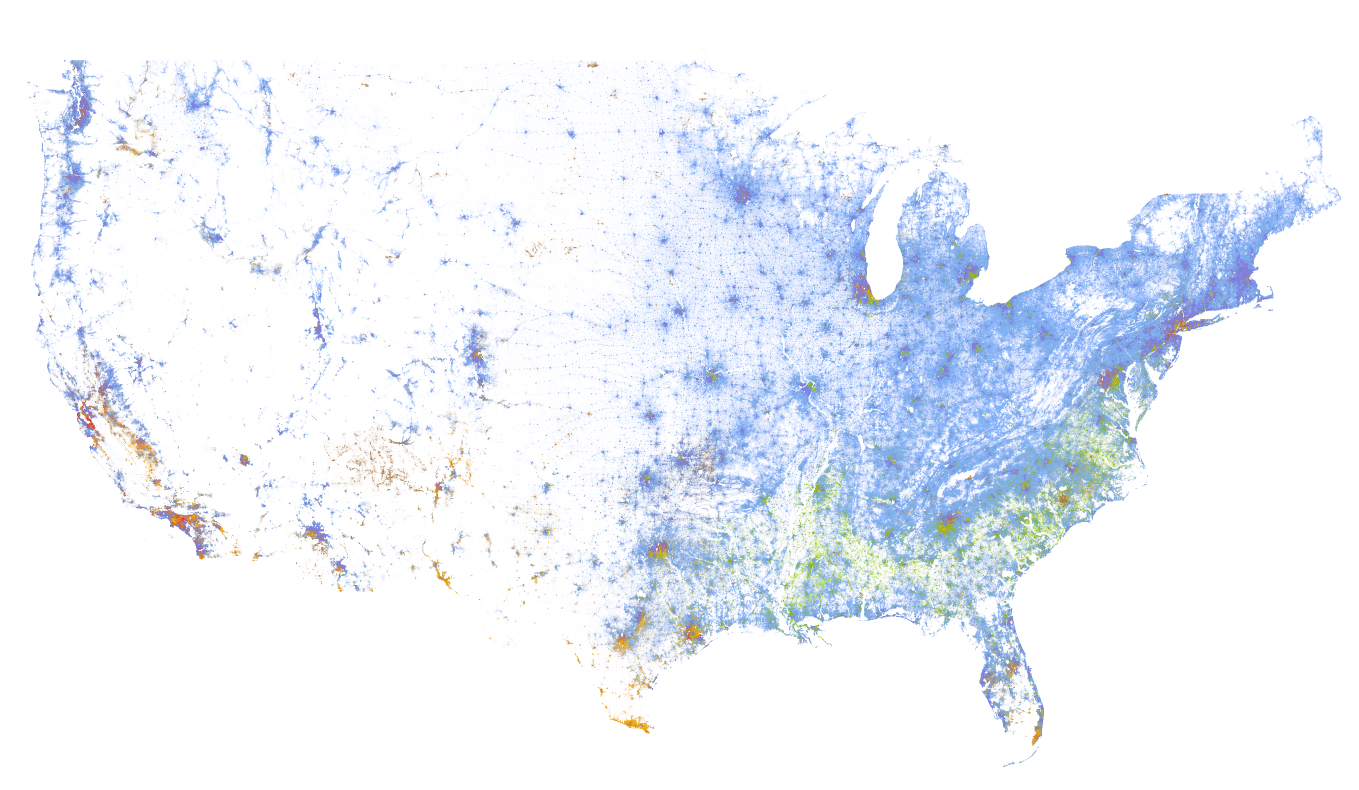

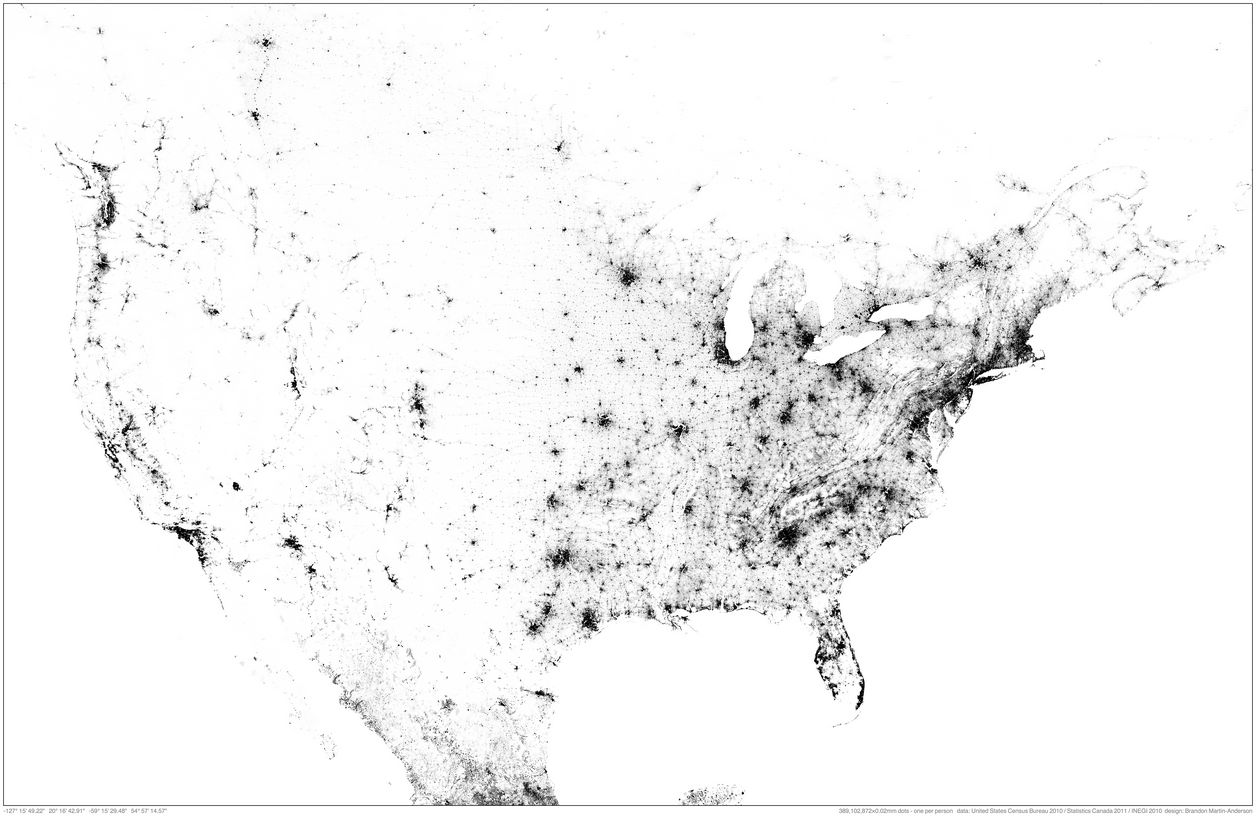

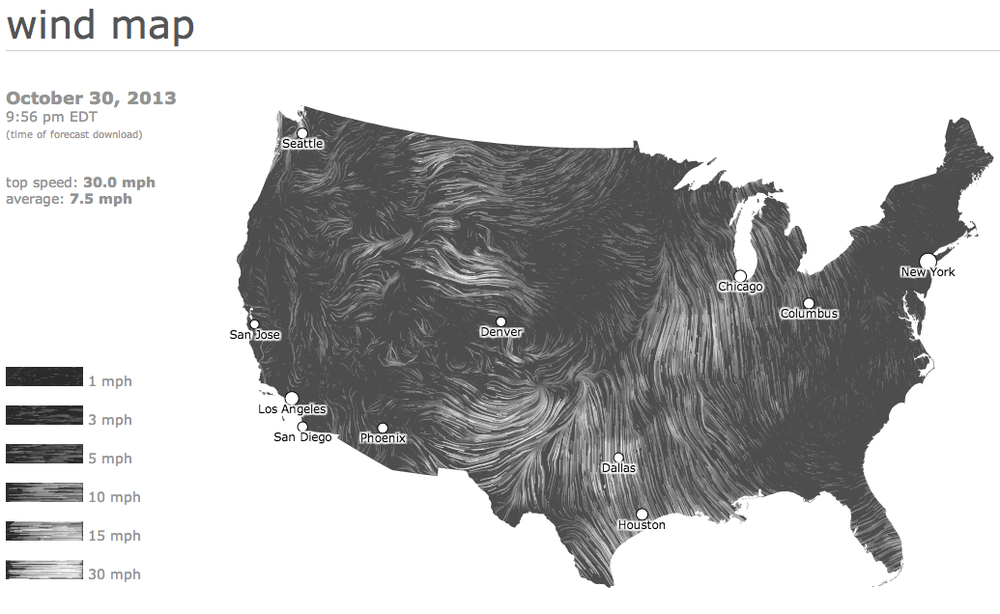

Finally, libraries are all about literacy. And so are Hackathons; only it's called numeracy in the data context. If you learn one thing at a Hackathon, it should be not to be afraid of data; don't worry, you can't break it. Play with the data, and start exploring how to use your spreadsheet. If you're an expert at Excel, download QGIS and start playing with a free and open source GIS. Think about the data, the stories it might tell, and how it might address your frustrations with the government process.
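If you want to go one step past the spreadsheet, playing with data can be as small as the sketch below. It is only an illustration: the rows in `SAMPLE_CSV` are made up to stand in for a file you might download from an open data portal, and the column names and the helper `count_by_column` are hypothetical, not taken from any real data set.

```python
import csv
import io
from collections import Counter

# Made-up rows standing in for a downloaded open data CSV file.
# The column names and values are assumptions for illustration only.
SAMPLE_CSV = """neighbourhood,request_type
Downtown,Pothole
Strathcona,Graffiti
Downtown,Snow removal
Oliver,Pothole
Downtown,Pothole
"""


def count_by_column(csv_text, column):
    """Count how often each value appears in one column of a CSV."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row[column] for row in reader)


# Tally the request types, most common first.
counts = count_by_column(SAMPLE_CSV, "request_type")
for value, n in counts.most_common():
    print(f"{value}: {n}")
```

Swapping `"request_type"` for `"neighbourhood"` asks a different question of the same rows, which is really all that exploring a data set means. Nothing here changes the original data, so there is nothing to break.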

Resources

Finding a place in tech without writing a line of code.

Open data resources from Canada and around the world

Datalibre.ca: A blog which believes all levels of Canadian government should make civic information and data accessible to their citizens at no cost and in open formats.

Map school offers an introduction to maps and map making.

The Elements of User Experience describes the design process to build good software. There is a lot of non-code that goes into software, and this website/sample chapter provides good insight.

Open Data Tools offers a range of tools to help understand the open data sets that you may be working with.

I use AimerSoft PDF Converter Pro, in conjunction with ScanSnap, to convert either paper documents or PDFs to Excel data tables.